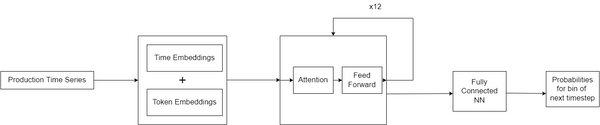

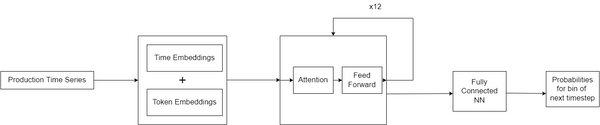

This article proposes a method for significantly faster Arps decline curve fitting on large datasets using TensorFlow on GPUs. It addresses a limitation of traditional approaches, which fit curves iteratively with tools such as SciPy's `curve_fit`, one series at a time. By leveraging TensorFlow's parallel processing